Reading Time: 5 minutes

Introduction

Employees who start and end their careers in a single organization are rare. Most switch jobs after a few years of service. Although the reasons vary from case to case, these exits are either voluntary attrition or organization-driven.

That being said, abrupt voluntary attrition is no longer a simple matter: it can seriously impact business growth KPIs. For example, frequent and unplanned hiring cycles are costly and hamper operational productivity. Global HR teams have been looking for solutions to this phenomenon and have devised several programs and perks, exploring the potential of artificial intelligence and business intelligence to retain employees. But these efforts have not been adequate or, more accurately, precise enough to achieve their intended purpose.

Leveraging AI technology to understand employee attrition could be the way forward. Forecasting attrition trends could help organizations make meaningful changes in the present, enhancing retention in the future.

In this document, we will explore how enterprises can build resilient HR departments, capable of understanding and mitigating employee attrition issues.

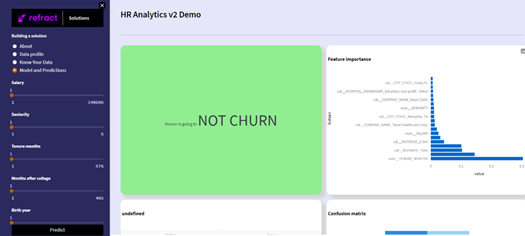

At Fosfor, we have built Refract, a solution that helps us understand and accurately predict future churn events. Refract is easy to use and presents insights visually, so business users can understand the underlying attrition trends and address future attrition risks in the present.

But to do this, we had to first understand the causes of attrition or churn in any given organization.

While every employee’s reason for departing the organization may vary, there are several common factors that often contribute to employees’ voluntary churn. Employees who are planning to leave an organization are often looking for:

- Higher compensation

- Career advancement

- Improved work-life balance

- Better organizational culture and values

- Better recognition in an organization

- Easier commute or relocation

Although an organization’s culture and a lack of recognition play crucial roles in employee attrition, these factors are very difficult to collect and quantify. So, in this case study, we study drivers such as compensation, work-life balance, and commute, in correlation with demographics such as age and sex.

An American healthcare service provider solved attrition issues with Fosfor’s Refract.

Leading American healthcare providers are facing serious personnel shortages due to rising attrition rates. Nursing staff, who play a pivotal role in delivering necessary healthcare to patients at the grassroots level, are at the core of this phenomenon.

A few key challenges that organizations must overcome to mitigate churn:

- Inability to accurately analyze the termination history data and respective reasons for attrition.

- Not having an appropriate understanding of external factors like industry headwinds, global macro trends, industry sentiment data, and their possible impact on the HR data.

- A lack of intelligence mechanisms to build and maintain data pipelines that can present the data in easy-to-consume formats.

- Inability to understand the history of events and data, and look into the future to know how to pivot.

The Refract Solution:

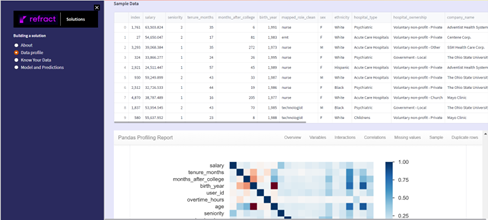

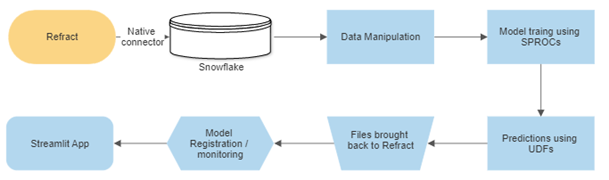

Refract provides users with an enterprise AI platform to track and consume the model performance. The data manipulation and modeling happen in the Snowflake environment, ensuring data security. The Streamlit applications are built and hosted on top of Refract, providing users with an interface to consume insights generated from the model directly through dynamic visualization widgets.

Key benefits of using Refract:

- Powerful, flexible Data Science pipelines built through Snowpark for Python.

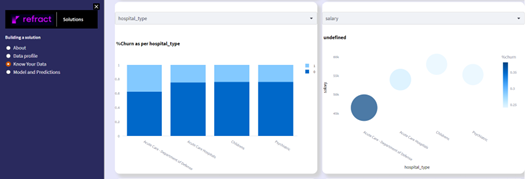

- Quick visualizations of attrition facts & figures through Streamlit (quicker turnaround for everyday visualizations)

- Interactive storytelling with a quick app-style deployment of notebooks

Solution Workflow

Decoding the Data-to-Insights Journey

To understand and predict employee attrition, we used the following data points:

- Employee demographics such as year of birth, sex, distance from the workplace, type of degree, tenure in the organization, and ethnicity along with their salary details.

- Organizational details such as the type of hospital, the type of hospital ownership, and the US state the hospital belongs to.

- Data for how much overtime each employee records.

- The churn variable, which indicates the employees who have churned.

Step 1: We used Refract’s integrated Jupyter notebooks, which connect to the Snowflake instance, to start a new session. Because the Snowpark API requires Python 3.8, Refract lets users choose the relevant Python version.
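As a rough illustration of starting a Snowpark session from a notebook, here is a minimal sketch. The environment variable names (`SNOWFLAKE_ACCOUNT` and so on) and both helper functions are assumptions made for this example, not part of Refract; only `Session.builder.configs(...).create()` is the standard Snowpark for Python entry point.

```python
import os


def connection_parameters(env=os.environ):
    """Collect Snowflake connection settings from environment variables.

    The SNOWFLAKE_* variable names are hypothetical; use whatever your
    notebook environment provides.
    """
    keys = ("account", "user", "password", "role", "warehouse", "database", "schema")
    return {
        key: env[f"SNOWFLAKE_{key.upper()}"]
        for key in keys
        if f"SNOWFLAKE_{key.upper()}" in env
    }


def create_session(params):
    """Open a Snowpark session from a dict of connection parameters."""
    # Imported lazily so connection_parameters() works without Snowpark installed.
    from snowflake.snowpark import Session

    return Session.builder.configs(params).create()
```

In a notebook, `session = create_session(connection_parameters())` would then give the session object that the later steps pass around.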

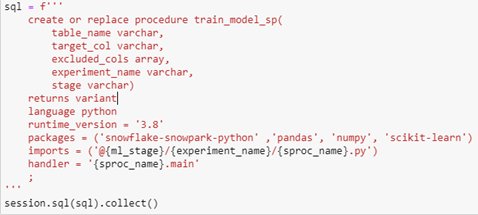

Step 2: We created a data pre-processing pipeline for simple manipulations such as missing value imputation, data scaling, and one-hot encoding. We followed up with a model training pipeline consisting of a Random Forest algorithm and a grid search for finding the best parameters. All of this code is written as Python functions in a separate .py file, which is then sent to the Snowflake stage that you will be using. The training function is then registered as a stored procedure using Snowpark SQL.
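The original code is not reproduced in this post, so the following is only a sketch of what such a training module could look like, assuming scikit-learn for the pre-processing, Random Forest, and grid search. The function names, the parameter grid, and the `upload_to_stage` helper are illustrative assumptions; `session.file.put` is the Snowpark call for uploading a local file to a stage.

```python
from sklearn.compose import ColumnTransformer
from sklearn.ensemble import RandomForestClassifier
from sklearn.impute import SimpleImputer
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler


def build_training_pipeline(numeric_cols, categorical_cols):
    """Impute, scale, and one-hot encode, then tune a Random Forest by grid search."""
    numeric = Pipeline([
        ("impute", SimpleImputer(strategy="median")),
        ("scale", StandardScaler()),
    ])
    categorical = Pipeline([
        ("impute", SimpleImputer(strategy="most_frequent")),
        ("onehot", OneHotEncoder(handle_unknown="ignore")),
    ])
    preprocess = ColumnTransformer([
        ("num", numeric, numeric_cols),
        ("cat", categorical, categorical_cols),
    ])
    model = Pipeline([
        ("preprocess", preprocess),
        ("forest", RandomForestClassifier(random_state=0)),
    ])
    # Illustrative grid only; the values used in the case study are not given.
    param_grid = {
        "forest__n_estimators": [50, 100],
        "forest__max_depth": [3, None],
    }
    return GridSearchCV(model, param_grid, cv=3, scoring="roc_auc")


def upload_to_stage(session, local_path, stage_name):
    """Send a local file to a Snowflake stage through a Snowpark session."""
    # auto_compress=False keeps the .py file importable from the stage.
    return session.file.put(
        local_path, f"@{stage_name}", auto_compress=False, overwrite=True
    )
```

Calling `build_training_pipeline(...).fit(X, y)` on a pandas DataFrame runs the whole search, and `upload_to_stage(session, "train.py", "ML_STAGE")` would push the module to a stage (both names are placeholders).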

Using the above code, you can send any file to your Snowflake stage.
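Registration of the staged file might look like the sketch below. It assumes the Snowpark for Python `session.sproc.register_from_file` API; the file name, handler name, procedure name, and package list are hypothetical placeholders. The return and input types are passed in by the caller (for example `StringType()` from `snowflake.snowpark.types`).

```python
def register_training_sproc(
    session,
    stage_name,
    return_type,
    input_types,
    file_name="train.py",               # hypothetical module name
    handler="train_model",              # hypothetical main function in that module
    sproc_name="TRAIN_ATTRITION_MODEL",  # hypothetical procedure name
):
    """Register a staged Python file's handler as a permanent stored procedure."""
    return session.sproc.register_from_file(
        file_path=f"@{stage_name}/{file_name}",
        func_name=handler,
        # The handler's first parameter is the Snowpark session itself;
        # input_types describes only the arguments that follow it.
        return_type=return_type,
        input_types=input_types,
        name=sproc_name,
        # Packages the training code imports; this list is an assumption.
        packages=["snowflake-snowpark-python", "scikit-learn", "pandas"],
        is_permanent=True,
        stage_location=f"@{stage_name}",
        replace=True,
    )
```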

This will register the model training pipeline as a stored procedure. Remember to list the Python modules your training pipeline uses, and if your pipeline has multiple functions, specify the main function as the handler of the stored procedure (SPROC).

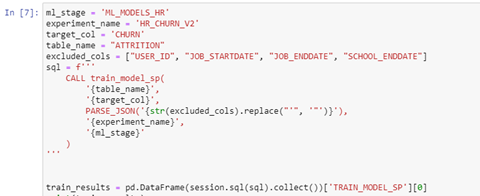

Using the above code, you can trigger the training stored procedure, while mentioning the variables that you don’t want to use in your training.
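In plain Snowpark terms, a call of this kind could be sketched as follows. The procedure name and the convention of passing excluded columns as one comma-separated string are assumptions for illustration; `session.call` is the Snowpark method for invoking a stored procedure by name.

```python
def run_training(session, table_name, excluded_columns,
                 sproc_name="TRAIN_ATTRITION_MODEL"):
    """Call the registered training procedure, naming the columns to leave out."""
    # Joined into a single string so the argument maps onto a plain VARCHAR
    # parameter; the real handler may expect a different shape.
    return session.call(sproc_name, table_name, ",".join(excluded_columns))
```

For example, `run_training(session, "EMPLOYEE_DATA", ["EMPLOYEE_ID", "NAME"])` would train on every column except the two identifiers (table and column names are placeholders).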

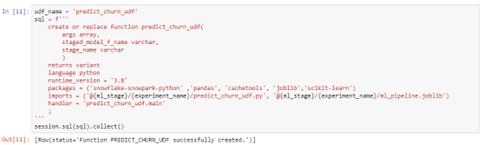

Step 3: As in step 2, you can create a separate file defining your prediction functions, send that file to your Snowflake stage, and then register the functions in Snowflake using the code below.

Here, we register the prediction file as a Snowpark UDF (user-defined function), with almost the same imports and handler.
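A UDF registration along these lines could be sketched as below, assuming the Snowpark for Python `session.udf.register_from_file` API. The file, handler, UDF, and model artifact names are hypothetical, as is the assumption that the trained model was saved to the same stage. The return and input types are supplied by the caller.

```python
def register_prediction_udf(
    session,
    stage_name,
    return_type,
    input_types,
    file_name="predict.py",     # hypothetical module name
    handler="predict_churn",    # hypothetical prediction function
    udf_name="PREDICT_CHURN",   # hypothetical UDF name
    model_file="model.joblib",  # hypothetical model artifact on the stage
):
    """Register a staged prediction function as a permanent Snowpark UDF."""
    return session.udf.register_from_file(
        file_path=f"@{stage_name}/{file_name}",
        func_name=handler,
        return_type=return_type,
        input_types=input_types,
        name=udf_name,
        # Extra staged files the UDF needs at run time, here the saved model.
        imports=[f"@{stage_name}/{model_file}"],
        # Packages the prediction code imports; this list is an assumption.
        packages=["scikit-learn", "pandas", "joblib"],
        is_permanent=True,
        stage_location=f"@{stage_name}",
        replace=True,
    )
```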

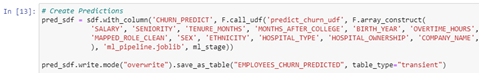

We can call the UDF and trigger the prediction on an entire Snowflake table (sdf).
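One way to score every row is to call the UDF from SQL through the session, as in this sketch. The UDF name, the output column name, and the helper itself are assumptions. When working from a Snowpark DataFrame, `call_udf` in `snowflake.snowpark.functions` plays the same role.

```python
def score_table(session, table_name, feature_cols, udf_name="PREDICT_CHURN"):
    """Return a lazily evaluated DataFrame with one prediction per table row."""
    args = ", ".join(feature_cols)
    # Identifiers are interpolated directly, so pass only trusted names.
    return session.sql(
        f"SELECT *, {udf_name}({args}) AS PREDICTION FROM {table_name}"
    )
```

The feature columns must be listed in the same order as the UDF's input types.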

Step 4: Once the model training and predictions were done, we brought the model back to Refract, along with all the files needed for scoring, and registered the model in Refract for monitoring and consumption.