Reading Time: 4 minutes

As Data Science and Machine Learning practitioners, we often face the challenge of finding solutions to complex problems. One powerful tool that can help speed up the process is the Generative Pre-trained Transformer 3 (GPT-3) language model.

What is GPT?

GPT stands for “Generative Pre-trained Transformer.” It is a type of Generative AI language model that uses deep learning techniques to generate human-like text. GPT models are trained on vast amounts of text data and can learn to generate natural-sounding language in a variety of contexts. GPT models have been used for a wide range of natural language processing tasks, including text generation, question answering, and language translation. They have also been used to create chatbots and other conversational artificial intelligence applications.

GPT Model Series

OpenAI is an artificial intelligence research laboratory whose engineers and researchers are dedicated to advancing the field of artificial intelligence in a safe and responsible manner. They have developed several cutting-edge machine learning models, including the GPT series of language models. A few of them are listed below.

| Model | Description |
| --- | --- |
| GPT-3 | The GPT-3 (Generative Pre-trained Transformer 3) language model has 175 billion parameters. It can generate high-quality natural language text that is often hard to distinguish from human-written text, and can be used for a wide range of tasks, from text completion and summarization to question answering and language translation. |
| GPT-2 | GPT-2 (Generative Pre-trained Transformer 2) is an earlier version of the GPT model, with 1.5 billion parameters. It is still a capable language model and can be used for many of the same tasks as GPT-3, albeit with somewhat less accuracy and fluency. |
| Codex | Codex is a language model designed specifically for generating code, trained on a large corpus of open-source code repositories. It can be used to generate code snippets, complete functions, and even entire programs in a wide range of programming languages. |
| DALL-E | DALL-E is a model trained to generate images from textual descriptions. It can be used to create custom visualizations and artwork based on textual input. |

Here are some ways GPT models can help speed up your DS/ML experiments and implementations:

1) Generating text for documentation and reports: Writing documentation and reports can be a time-consuming task. With a generative AI platform like GPT-3, we can generate high-quality, natural language text that can be used as a starting point for our documentation and reports. For example, we can generate summaries of our data, descriptions of our models, or explanations of our results.

2) Generating code: GPT-3 can generate code snippets based on natural language descriptions of the task we are trying to accomplish. This can be especially useful for tasks such as data cleaning, feature engineering, and model tuning. We can use the generated code as a starting point and modify it as necessary to fit our specific use case.

3) Generating ideas: GPT-3 can also be used to generate new ideas for Data Science and Machine Learning projects. We can input a prompt describing the problem we are trying to solve or the data we are working with, and GPT-3 can generate suggestions for models, algorithms, or approaches we might want to consider.
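As a rough illustration of item 2, a natural language description of a task can be wrapped into a prompt with a small helper. This is only a sketch: the function name `build_code_prompt`, its parameters, and the prompt wording are hypothetical choices made for illustration and are not part of any library.

```python
def build_code_prompt(task, columns):
    """Turn a plain-English task description into a code-generation prompt.

    Hypothetical helper: the prompt wording is an assumption and will
    likely need tuning for a real model.
    """
    column_list = ", ".join(columns)
    return (
        "Write Python code using pandas for the following task.\n"
        f"The DataFrame `df` has these columns: {column_list}.\n"
        f"Task: {task}\n"
        "Return only the code."
    )


prompt = build_code_prompt(
    task="Drop rows with missing ages and standardize the income column.",
    columns=["age", "income", "city"],
)
print(prompt)
```

The generated code should still be reviewed and adapted before use, as noted above.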

GPT inside notebooks

To make it easier to use GPT-3 in Python, there are libraries that wrap the API and provide a simple interface to the language model. One such library is OpenAI's own openai package. With this library, we can use GPT-3 to quickly generate text, code, or other content to assist in our Data Science and ML workflows.

In this blog, we’ll explore how to use IPython magic functions, which are available in Jupyter Notebook, to access GPT and enhance the user experience.

Magic functions

Magic functions are a feature of IPython, the kernel behind Python notebooks in Jupyter Notebook, that lets users create shortcuts or special commands. These functions are called “magic” because they are not called like typical Python functions: a line magic is invoked with a % prefix and receives the rest of the line as its argument, while a cell magic is invoked with %% and receives the whole cell. Built-in magics cover a variety of tasks, such as timing code execution (%timeit), debugging (%debug), and profiling (%prun). Users can also define custom magics that integrate with external libraries or APIs or that wrap repetitive tasks, making coding and data analysis more efficient and streamlined.
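To make the idea concrete, here is a minimal sketch of a custom line magic. The `wordcount` function and magic name are hypothetical and chosen only for illustration. The registration step is skipped when the code is not running under IPython, so the function can also be used as plain Python.

```python
def wordcount(line):
    """Return the number of whitespace-separated words in `line`."""
    return len(line.split())


try:
    from IPython import get_ipython

    shell = get_ipython()  # None when not running inside IPython/Jupyter
except ImportError:
    shell = None

if shell is not None:
    # Makes `%wordcount some text` available in the current session.
    shell.register_magic_function(
        wordcount, magic_kind="line", magic_name="wordcount"
    )
```

In a notebook cell, `%wordcount magic functions are handy` should then return 4.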

How to use GPT inside a notebook

Here are step-by-step instructions on how to define a custom magic function that calls OpenAI and integrate it into Jupyter Notebook:

Install the OpenAI Python library

To use OpenAI in Python, you’ll need to install the openai package, which pulls in its dependencies automatically. You can do this by running the following command in your terminal or command prompt:

pip install openai
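The `openai.Completion` interface used later in this post belongs to releases of the `openai` package before 1.0. From 1.0 onward the package uses a client object instead, so it can help to check which version is installed. The helper names below are hypothetical. This is a small sketch built on the standard library's `importlib.metadata`.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package):
    """Return the installed version string of `package`, or None if absent."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


def has_legacy_completion(ver):
    """True when the version string is a pre-1.0 release of openai."""
    return ver is not None and int(ver.split(".")[0]) < 1


openai_version = installed_version("openai")
print("openai version:", openai_version)
print("legacy Completion API available:", has_legacy_completion(openai_version))
```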

Authenticate with the OpenAI API

Before you can use the OpenAI API in your Python code, you’ll need to authenticate with your API key. You can obtain your API key by creating an account on the OpenAI website and following the instructions provided. Once you have your API key, you can authenticate by adding the following code to your Python script:

import openai

openai.api_key = "YOUR_API_KEY"

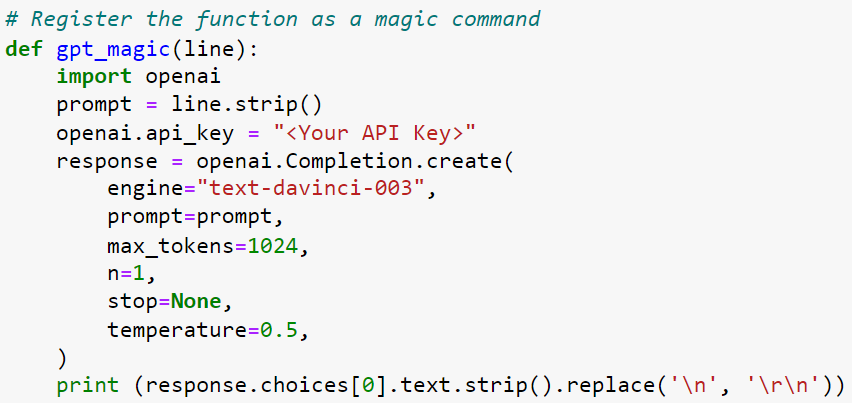
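Hard-coding a key in a notebook makes it easy to leak when the notebook is shared. One common alternative, sketched here, is to read the key from an environment variable. `OPENAI_API_KEY` is the variable name the openai package conventionally looks for. The `load_api_key` helper itself is hypothetical.

```python
import os


def load_api_key(env=os.environ, name="OPENAI_API_KEY"):
    """Read the API key from an environment mapping, failing loudly if unset."""
    key = env.get(name, "").strip()
    if not key:
        raise RuntimeError(f"Set the {name} environment variable first.")
    return key


# In a notebook, after `import openai`:
# openai.api_key = load_api_key()
```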

Define the custom magic function

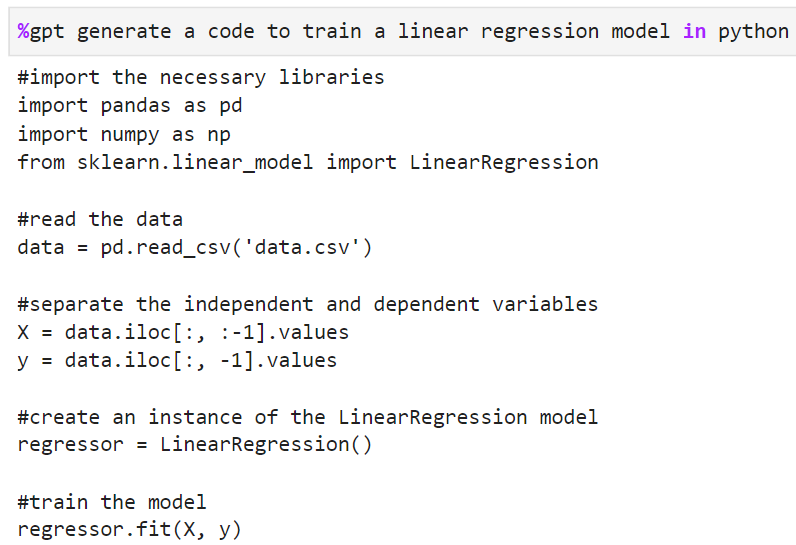

In this example, we’ll create a line magic function called GPT3 that generates text using the OpenAI GPT-3 language model. Here’s the code:

In this code, we’re using the openai.Completion.create method (available in versions of the openai package before 1.0) to generate text using the GPT-3 language model, with a maximum of 1024 tokens. The line argument passed to the function is used as the prompt for generating the text.
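Since the code itself is not reproduced in the text, the following is only a sketch of what such a line magic could look like, not the original implementation. It assumes a pre-1.0 release of the `openai` package, where `openai.Completion.create` exists, and an API key that has already been set. The model name is an assumption, since the post does not name one, and it may no longer be served. The `create` parameter is a hypothetical hook that allows the function to be tried without network access.

```python
MODEL = "text-davinci-003"  # assumption: the post does not name a model


def gpt3(line, create=None):
    """Send `line` as the prompt and return the generated text."""
    if create is None:
        import openai  # assumes openai<1.0, where openai.Completion exists

        create = openai.Completion.create
    response = create(model=MODEL, prompt=line, max_tokens=1024)
    return response["choices"][0]["text"].strip()


try:
    from IPython import get_ipython

    shell = get_ipython()  # None when not running inside IPython/Jupyter
except ImportError:
    shell = None

if shell is not None:
    # Makes `%GPT3 <prompt>` available in the current notebook session.
    shell.register_magic_function(gpt3, magic_kind="line", magic_name="GPT3")
```

In a notebook, a cell such as `%GPT3 Explain what feature scaling is` would then send the rest of the line as the prompt and display the returned text.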