Reading Time: 2 minutes

Technical debt refers to the cost of shortcuts or sub-optimal solutions taken during development that make future maintenance and software upgrades more difficult and more expensive. The term “technical debt” was coined by Ward Cunningham in 1992. He likened accumulating technical debt to taking out a loan: just as with a financial loan, technical debt may accrue interest over time and require eventual repayment, and the added costs compound the longer repayment is deferred. This blog focuses on the technical debt incurred by products used to develop Machine Learning (ML) models. Tools that prioritize speed and efficiency over long-term maintainability take shortcuts that may lead to decreased accuracy, increased technical debt in machine learning, and higher costs for future maintenance and updates.

The ML journey

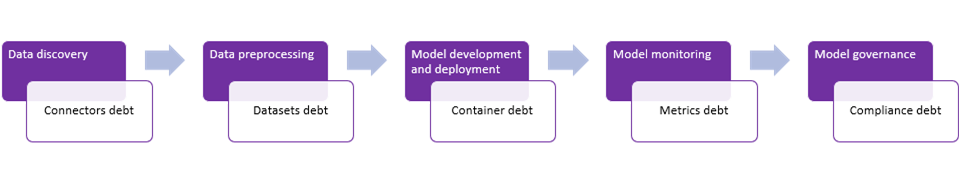

As shown in Figure 1, the end-to-end machine learning model development journey involves:

- Data discovery

- Data preprocessing

- Model development and deployment

- Model monitoring

- Model governance

Figure 1: The ML model development journey

This journey keeps the model’s results accurate and relevant. At each stage, however, there is a high risk of incurring technical debt if ML products do not make the right integration choices.

Let’s look at the stages of the ML model development journey and learn where and how technical debt grows.

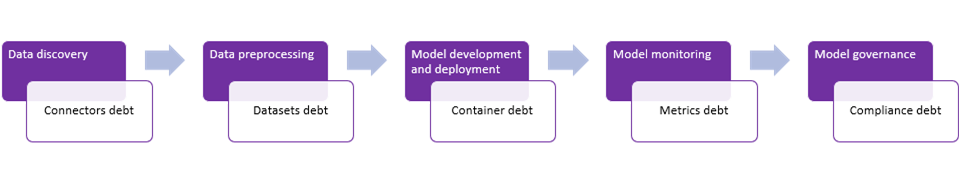

Figure 2: Types of debt across the ML model development journey

As Figure 2 shows, different types of technical debt arise at each stage of the ML model development journey. Let’s dive into each of them.

Data discovery

Most organizations are already well into their data journey or have consolidated their data sources through cloud migrations, enterprise data lakes, data meshes, or data catalogs. As a result, ML products often support connectors to a wide range of data sources on the market. Unfortunately, this diversity creates a debt: the latest versions of the required drivers, and their ever-evolving connection-string parameters, must be maintained.

Connectors debt stems from integration issues with diverse data sources and the ongoing effort needed to keep each connector current with the latest releases.

Data preprocessing

Data preprocessing quality is a deep-rooted issue in every organization, and no single solution exists for it. In the ML journey, a lot of time is spent cleaning datasets and preprocessing data, with the goal of producing a dataset ready for building models. Along the way, datasets accumulate: multiple versions are created to preserve intermediate stages of preprocessing. This creates a debt of storing and maintaining unnecessary or underutilized datasets.

This dataset debt in machine learning arises from the abundance of intermediate datasets created during data preparation.
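To make this concrete, here is a minimal Python sketch of how intermediate datasets that no model depends on could be flagged for cleanup. It assumes lineage is recorded as a hypothetical mapping from each dataset to the datasets it was derived from; the function name and dataset names are illustrative only.

```python
def unused_datasets(lineage: dict[str, list[str]], model_inputs: set[str]) -> set[str]:
    """Return datasets that no model depends on, directly or through lineage.

    `lineage` maps each dataset name to the names of the datasets it was
    derived from (a hypothetical format chosen for this sketch).
    """
    needed: set[str] = set()
    stack = list(model_inputs)
    # Walk upstream from every model input, marking each ancestor as needed.
    while stack:
        name = stack.pop()
        if name in needed:
            continue
        needed.add(name)
        stack.extend(lineage.get(name, []))
    # Anything recorded in the lineage but never reached is a cleanup candidate.
    return set(lineage) - needed


# Illustrative lineage: only sales_clean feeds a model.
lineage = {
    "raw_sales": [],
    "sales_dedup": ["raw_sales"],
    "sales_dedup_v2": ["raw_sales"],
    "sales_clean": ["sales_dedup"],
    "sales_tmp_debug": ["sales_dedup"],
}
print(sorted(unused_datasets(lineage, {"sales_clean"})))
# ['sales_dedup_v2', 'sales_tmp_debug']
```

Flagged datasets would still deserve a human review before deletion, since lineage records can be incomplete.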

Model development and deployment

Data scientists can develop models in a variety of Integrated Development Environments (IDEs). ML products therefore need to support popular IDEs for widely used programming languages such as Python, R, and SAS. Maintaining these IDEs results in container debt: multiple IDE versions must be kept available, each supporting multiple versions of the underlying language runtimes.

This container debt occurs due to the overhead of supporting a variety of IDEs and their differing versions, multiple ML frameworks, and an ever-growing list of open-source packages.

Model monitoring

The monitoring artifacts required for model evaluation vary by model type. For example, the relevant metrics differ for regression, binary classification, and multi-class classification. In addition, several types of drift can occur and degrade model quality. To evaluate models continuously and automatically, and to alert users when model quality deviates, ML products accrue technical debt in the form of the complexity and maintenance of evolving graphs and artifacts needed for accurate monitoring.

This metrics debt arises from the complexity of displaying many metrics for each model within a DataOps platform. The volume of metrics can confuse users, and calculating every metric and keeping it accurate on a daily basis adds its own technical debt.
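Drift is one of the signals such monitoring has to track. As an illustration only, the sketch below computes the Population Stability Index (PSI), a common drift score, between a reference sample and a new sample of a single feature. The equal-width binning and the `eps` smoothing for empty bins are assumptions of this sketch, not Refract behavior.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of `actual` against the reference `expected`.

    Bins are equal-width over the range of the reference sample; values that
    fall outside that range are clamped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid division by zero for a constant reference

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for x in values:
            idx = min(max(int((x - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm below stays finite.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


reference = [x / 100 for x in range(1000)]        # evenly spread over [0, 9.99]
similar = [x / 100 + 0.005 for x in range(1000)]  # almost the same distribution
shifted = [x / 100 + 3 for x in range(1000)]      # shifted upward by 3
print(psi(reference, similar) < 0.1, psi(reference, shifted) > 0.25)  # True True
```

A PSI below roughly 0.1 is often read as stable and above roughly 0.25 as significant drift. These are rules of thumb rather than Refract settings; an automated monitor would compare each run's score against whatever thresholds the team chooses and alert when one is crossed.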

Model governance

Governance requirements vary by organization, domain, and geography. An ML product therefore needs a governance framework that caters to these varied requirements without falling into the trap of customizing the solution for each customer. The result is a complex web of roles, responsibilities, and reports that must be maintained according to organizational and governance requirements.

This compliance debt arises when an ML product cannot instantly generate the reports needed to meet regulatory, audit, administrative, or compliance requirements.

Debt identification and avoidance with Refract

The product development process should anticipate these forms of technical debt in machine learning and plan to avoid them as far as possible. At Refract, technical debt is one of the metrics we track to ensure that product users are not burdened by these ML product issues.

Solving connectors debt

Connectors debt can be reduced by limiting support to the most popular and widely used connectors on the market, or by partnering with products that pioneer connectors to all data sources. Supporting connectors in-house means tracking connector versions regularly and keeping drivers updated to their latest versions.

Refract manages all data connectors in-house, monitors the changes being released on each connector, and promptly rolls out the changes to the product. This helps our customers to stay updated with the latest driver configurations.
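The version tracking described above could be sketched as follows. This is not Refract’s implementation: the `Connector` record, the connector names, and the version numbers are hypothetical, and a real system would fetch each vendor’s latest release rather than hard-code it.

```python
from dataclasses import dataclass


def parse_version(version: str) -> tuple[int, ...]:
    """Turn a dotted numeric version such as '42.7.3' into a comparable tuple."""
    return tuple(int(part) for part in version.split("."))


@dataclass
class Connector:
    name: str
    installed: str  # driver version currently bundled with the product
    latest: str     # newest driver version published by the vendor


def stale_connectors(connectors: list[Connector]) -> list[str]:
    """Return the names of connectors whose bundled driver lags the latest release."""
    return [c.name for c in connectors if parse_version(c.installed) < parse_version(c.latest)]


# Hypothetical inventory; the version numbers are illustrative, not real release data.
inventory = [
    Connector("postgres", installed="42.6.0", latest="42.7.3"),
    Connector("snowflake", installed="3.13.2", latest="3.13.2"),
    Connector("oracle", installed="21.9", latest="23.4"),
]
print(stale_connectors(inventory))  # ['postgres', 'oracle']
```

Comparing tuples rather than strings avoids the classic mistake of ranking "1.10" below "1.9". Versions with suffixes such as `rc1` would need a fuller parser, for example `packaging.version.Version`.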

Solving dataset debt

Fosfor Refract solves dataset debt through versioning. Users can easily view previous versions of a dataset and review the steps involved in each data preparation process. This helps data scientists understand the data preparation process incrementally and choose the ideal dataset for building models. Refract also reduces technical debt by writing data back to its source and by using push-down processing.
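A minimal sketch of dataset versioning, assuming rows fit in memory as JSON-serializable dictionaries. Each commit records a SHA-256 digest of the content plus a description of the preparation step, and a commit whose content matches the latest version is skipped. The class and method names are hypothetical and do not reflect Refract’s API.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class DatasetVersion:
    version: int
    digest: str            # SHA-256 of the serialized rows
    step: str              # description of the preparation step
    parent: Optional[int]  # version this one was derived from


@dataclass
class VersionedDataset:
    """Records version metadata only; storing the rows themselves is out of scope."""

    name: str
    versions: list[DatasetVersion] = field(default_factory=list)

    def commit(self, rows: list[dict], step: str) -> DatasetVersion:
        payload = json.dumps(rows, sort_keys=True).encode("utf-8")
        digest = hashlib.sha256(payload).hexdigest()
        latest = self.versions[-1] if self.versions else None
        # Content identical to the latest version: reuse it instead of adding a copy.
        if latest is not None and latest.digest == digest:
            return latest
        new = DatasetVersion(
            version=len(self.versions) + 1,
            digest=digest,
            step=step,
            parent=latest.version if latest else None,
        )
        self.versions.append(new)
        return new

    def history(self) -> list[str]:
        return [f"v{v.version}: {v.step}" for v in self.versions]


ds = VersionedDataset("customers")
ds.commit([{"id": 1, "age": None}, {"id": 1, "age": None}], "raw load")
ds.commit([{"id": 1, "age": None}], "drop duplicates")
ds.commit([{"id": 1, "age": None}], "re-run drop duplicates")  # unchanged, no new version
ds.commit([{"id": 1, "age": 0}], "impute missing age")
print(ds.history())
# ['v1: raw load', 'v2: drop duplicates', 'v3: impute missing age']
```

Hashing the content means a re-run that changes nothing does not add another intermediate dataset, which is exactly the accumulation described under data preprocessing.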

Solving container debt

Refract solves container debt by running data scientists’ notebooks in containers. Newer IDE versions can be added as new containers while older versions are scheduled for timely decommissioning, so users can work on the latest, secure IDEs without worrying about the effort required to maintain them.
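The add-new, retire-old pattern could be tracked with a simple inventory of IDE images and their end-of-support dates. The image names and dates below are hypothetical, and the sketch only plans which images to retire; it does not interact with any container runtime.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class IdeImage:
    ide: str
    version: str
    end_of_support: date  # date after which this image should no longer be offered


def plan_decommission(images: list[IdeImage], today: date) -> tuple[list[IdeImage], list[IdeImage]]:
    """Split images into those still supported and those due for retirement."""
    active = [img for img in images if img.end_of_support > today]
    retire = [img for img in images if img.end_of_support <= today]
    return active, retire


# Hypothetical image inventory.
images = [
    IdeImage("jupyterlab", "3.6", date(2024, 6, 30)),
    IdeImage("jupyterlab", "4.1", date(2026, 6, 30)),
    IdeImage("rstudio", "2023.06", date(2024, 12, 31)),
    IdeImage("rstudio", "2024.04", date(2026, 3, 31)),
]
active, retire = plan_decommission(images, today=date(2025, 1, 15))
print([f"{img.ide}:{img.version}" for img in retire])
# ['jupyterlab:3.6', 'rstudio:2023.06']
```

Recording an end-of-support date for every image when it is added makes the retirement schedule explicit instead of leaving old containers to linger.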

Solving metrics debt

Fosfor Refract solves metrics debt by carefully choosing the most relevant metrics for each model. It also reduces complexity for the user by making the required choices in the background, so that only the metrics relevant to a particular model are shown.
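One way to show only relevant metrics is a lookup from model type to metric functions, as in this sketch. The task names, the chosen metrics, and the pure-Python metric implementations are illustrative assumptions, not Refract’s actual selection logic.

```python
import math


def _ratio(num: float, den: float) -> float:
    """Divide, returning 0.0 instead of failing when the denominator is zero."""
    return num / den if den else 0.0


def accuracy(y_true, y_pred):
    return _ratio(sum(t == p for t, p in zip(y_true, y_pred)), len(y_true))


def precision(y_true, y_pred, positive=1):
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    return _ratio(tp, sum(p == positive for p in y_pred))


def recall(y_true, y_pred, positive=1):
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    return _ratio(tp, sum(t == positive for t in y_true))


def macro_f1(y_true, y_pred):
    # Unweighted mean of per-label F1 scores.
    scores = []
    for label in sorted(set(y_true) | set(y_pred)):
        p = precision(y_true, y_pred, positive=label)
        r = recall(y_true, y_pred, positive=label)
        scores.append(_ratio(2 * p * r, p + r))
    return _ratio(sum(scores), len(scores))


def rmse(y_true, y_pred):
    return math.sqrt(_ratio(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)), len(y_true)))


def mae(y_true, y_pred):
    return _ratio(sum(abs(t - p) for t, p in zip(y_true, y_pred)), len(y_true))


# Which metrics are shown for which model type (an illustrative choice).
METRICS_BY_TASK = {
    "regression": {"rmse": rmse, "mae": mae},
    "binary": {"accuracy": accuracy, "precision": precision, "recall": recall},
    "multiclass": {"accuracy": accuracy, "macro_f1": macro_f1},
}


def evaluate(task, y_true, y_pred):
    """Compute only the metrics registered for this model type."""
    try:
        metrics = METRICS_BY_TASK[task]
    except KeyError:
        raise ValueError(f"unknown task type: {task!r}") from None
    return {name: fn(y_true, y_pred) for name, fn in metrics.items()}


print(evaluate("binary", [1, 1, 0, 0], [1, 0, 0, 0]))
# {'accuracy': 0.75, 'precision': 1.0, 'recall': 0.5}
```

Keeping the mapping in one table means adding a model type or retiring a metric is a single-line change, and the user never sees metrics that do not apply to their model.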

Solving compliance debt

Users often spend a lot of time working out how to extract information in a presentable form. Fosfor Refract solves this compliance debt by managing compliance and audit requirements, providing easy options to extract model documentation and user audit information.
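Extracting user audit information can be as simple as filtering an event log by date and rendering it in a shareable format. The sketch below assumes a hypothetical `AuditEvent` record and writes CSV with Python’s standard `csv` module; the users, actions, and model names are made up.

```python
import csv
import io
from dataclasses import dataclass
from datetime import datetime


@dataclass
class AuditEvent:
    timestamp: datetime
    user: str
    action: str
    model: str


def audit_report(events: list[AuditEvent], start: datetime, end: datetime) -> str:
    """Render events with start <= timestamp < end as CSV text, oldest first."""
    rows = sorted((e for e in events if start <= e.timestamp < end), key=lambda e: e.timestamp)
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["timestamp", "user", "action", "model"])
    for e in rows:
        writer.writerow([e.timestamp.isoformat(), e.user, e.action, e.model])
    return buf.getvalue()


events = [
    AuditEvent(datetime(2024, 3, 2, 9, 30), "alice", "deploy", "churn_v3"),
    AuditEvent(datetime(2024, 2, 28, 17, 5), "bob", "approve", "churn_v3"),
    AuditEvent(datetime(2024, 4, 1, 8, 0), "alice", "retire", "churn_v2"),
]
report = audit_report(events, datetime(2024, 2, 1), datetime(2024, 4, 1))
print(report)
```

The half-open date range lets consecutive reporting periods be generated back to back without double-counting events that fall exactly on a boundary.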

Conclusion

Overall, machine learning products are more likely to accumulate technical debt if it is not thought through during the design process. There may even be more categories of debt than those discussed here. Still, by understanding and addressing technical debt at each stage of the end-to-end ML journey, you can set your ML projects up for success!